A few days ago, OpenAI released a cutting-edge image generation AI model natively integrated into GPT-4o, a multimodal LLM introduced in May 2024.

X has been filled with a lot of images created by this AI, highlighting its significant impact in recent days.

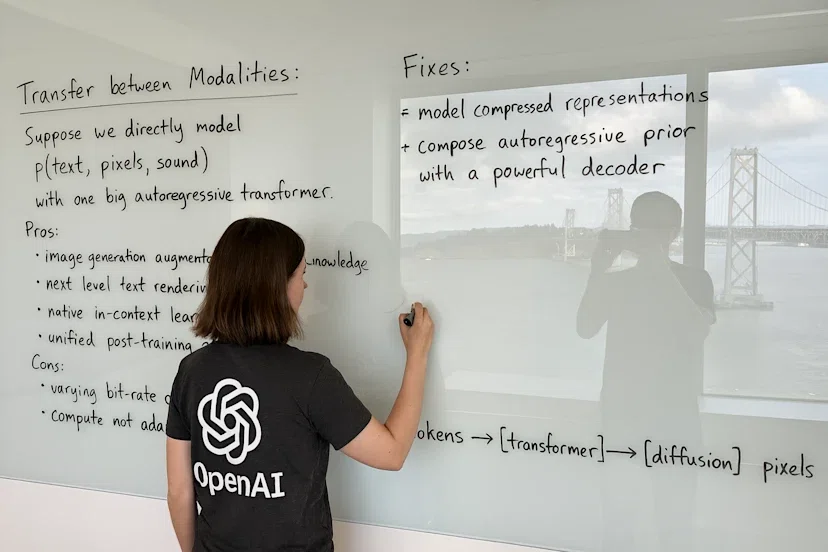

The incredible progress in image generation from DALL·E 3 to today's model is due to the implementation of a completely different AI architecture.

The former was done using "diffusion model", while the latter uses an "Autoregressive model".

Citation from OpenAI is here.

Unlike DALL·E, which operates as a diffusion model, 4o image generation is an autoregressive model natively embedded within ChatGPT.

Addendum to GPT-4o System Card: Native image generation

By implementing an autoregressive model for image generation, similar to text generations, the AI model can generate pixels one by one, leading to higher quality and more precise image generation-including the ability to incorporate text into the image.

GPT-4o can accurately predict the next pixel for an entire image, just like a predicting next word in text, truly making it "multimodal LLM".

So, two keys to the success of this model are:

1: Generating an image by repeatedly inferring each pixel allows the AI to produce text within the image, as well as generate higher-quality and more faithful images.

2: Thanks to the multimodal capabilities of GPT-4o, the AI can understand the user's intent from text or images and translate it into highly accurate and meaningful images.

In conclusions,